AI Image Generators Grapple with Transness

- CQ Quinan

Trans and non-binary people regularly face obstacles to mobility and migration, making experiences of travel and border-crossing challenging and sometimes even impossible. These challenges can include increased identity verification due to mismatches between gender presentation and the sex/gender markers or photos in legal documents, as well as interrogation upon passing through biometric checkpoints that build binary sex/gender into their operationalization. For instance, millimeter-wave body scanners require passenger screening personnel to interpret every traveler’s gender by pushing either a ‘female’ or a ‘male’ button as the traveler approaches the machine. Individuals who do not match the security agent’s gendered reading and interpretation trigger various security responses. In this way, security systems control and monitor the boundaries between male and female, and surveillance technologies construct the figure of the dangerous subject in relation to normative configurations of gender, race, and able-bodiedness. By mobilizing narratives of concealment and disguise, heightened security measures frame gender nonconformity as dangerous or threatening to national security. Intersecting forms of oppression (often based on racial, ethnic, and religious background) further exacerbate such obstacles to movement and mobility.

Binary-based biometric technologies rely on outmoded understandings of gender and sex, thereby forcing trans and gender-diverse bodies to conform to these systems (Quinan and Hunt). Similarly, the now-customary tool of Automatic Gender Recognition relies on outmoded understandings of gender, making assessments about an individual by identifying facial landmarks and contours. Automatic Gender Recognition is a subset of algorithmic decision-making, and most of its corporate use takes place under the cover of ‘tailored’ advertising: corporations deploy such tools to make recommendations built on assumptions about what different genders might want to purchase, look at, or listen to. These technologies and algorithms increasingly extract data on trans and non-binary users, which may then be used to train and ‘improve’ machine learning (Quinan and Hunt). These systems thereby reveal social and cultural assumptions that gender is physiological, static, and even measurable, and trans and non-binary communities risk being exploited by AI-based technologies that may exacerbate discrimination.

Computer scientists have also used data from social media platforms to improve the accuracy of these systems. For example, because hormone replacement therapy changes facial structure, trans individuals have become objects of study, particularly as some facial recognition and analysis technologies cannot always recognize trans people after they have medically transitioned. In this framing, trans faces are treated as challenges around which AI can be trained and machine-learned to reveal some sort of truth of the body (Ricanek). Even as biometric companies hone algorithms built around this research, discourses that frame trans people as inherently deceptive circulate widely (Bettcher).

As my collaborator and I have detailed elsewhere (Quinan and Hunt), there are imperialist underpinnings to this dynamic. The notion of the face as a quantitative code that says something about the individual has racial and colonial implications, as physiognomy has historically been linked to racial and sexual stereotyping. Digital studies scholar Mirca Madianou further elaborates the colonial implications of these approaches in her conceptualization of technocolonialism and data extraction: “The reworking of colonial relations of inequality occurs […] through the extraction of value from the data of refugee and other vulnerable people; the extraction of value from experimentation with new technologies in fragile situations for the benefit of stakeholders, including private companies” (Madianou 2). Transposing this to gender diversity, it is fair to say that value is extracted from trans faces. Ironically, however, many facial-analysis technologies exhibit high error rates when it comes to detecting, analyzing, and recognizing trans faces (Scheuerman et al.).

The ways in which trans people are made into objects of study and treated as challenge sets for machine learning raise broader questions as we enter a new era marked by ubiquitous artificial intelligence and the rapid rise of AI platforms like ChatGPT and DeepSeek. I would like to turn now to a brief inquiry into how these tools may affect trans and non-binary communities. In particular, I want to ask what role AI image generators play in both uncovering assumptions about transness and solidifying prejudicial attitudes towards trans people.

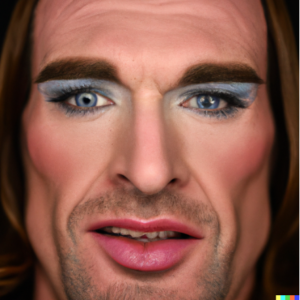

AI systems look for patterns in the data on which they are trained. In other words, they digest what has come before to serve as tools of replication (with a twist), largely reflecting particular value judgments that exist in the vast online world. Highly advanced AI image generators like Dall-E 2 and Midjourney are able to generate realistic images of (fake) trans individuals, but ones that seem entirely built upon outdated, clichéd imaginaries of how trans people look or act. This is illustrated by the following sample images that Dall-E 2 generated when I entered the prompt “Produce a photograph of a transgender woman”:

Here, we can recognize the common discourses that circulate around trans women. These generated images do not depict what one might imagine a conventional woman to look like. Rather than depicting trans women as women, they play on common stereotypes of men in drag. Hackneyed ideas of trans women failing to ‘pass’ or to ‘successfully’ embody traditional ideas of femininity mark each of these AI-produced images.

When I asked Dall-E 2 to “Produce a photograph of a transgender man,” it was even more confounded, as the following sample images exemplify:

The image generator seems almost confused by what a “transgender man” could look like, producing images that could easily have been generated by the previous prompt. It appears here that the phrase “transgender man” is overwritten by an understanding of “transgender” as referring specifically to trans women. This also aligns with a general obfuscation and invisibility of transgender men altogether, who rarely appear in mainstream contexts (Schilt).

Finally, I asked Dall-E 2 to “Produce a photograph of a non-binary person.” This prompt produced the most confounding results, as “non-binary” yielded upside-downness, backwardness, invisibility, and illegibility:

Dall-E 2 also rendered non-binary people as only parts of people. In other words, only small sections of the body, like feet or half of a face, were generated in the image:

There was also a sense of foreignness to these contorted images, with many of these non-binary individuals wearing clothing with nonsensical words and made-up linguistic characters, further exacerbating the notion of illegibility and difference. Similar to Scheuerman et al.’s study, which highlighted the inability of facial recognition software to accurately “recognize” non-binary users (precisely because the technology is binary-based, with only male and female options), AI image generators struggle to understand and represent a coherent individual outside binary gender norms.

An emergent body of academic research has begun to shed light on the acute dangers that AI systems like Automatic Gender Recognition pose for trans and non-binary populations (see Keyes; Hamidi et al.; Quinan and Hunt; Scheuerman et al.), including the reinforcement of gender binaries. However, given the novelty of AI image generators, few studies have examined the impacts these new technologies might have on transgender communities. A growing body of journalistic work has, however, uncovered similar dynamics when it comes to racially marginalized groups. Journalist Zachary Small, for instance, details how AI image generators negotiate race, reporting that “Bloomberg analyzed more than 5,000 images generated by Stability AI, and found that its program amplified stereotypes about race and gender, typically depicting people with lighter skin tones as holding high-paying jobs while subjects with darker skin tones were labeled ‘dishwasher’ and ‘housekeeper.’” Similarly, this New York Times investigation into racial bias in AI notes that “The algorithm skews toward a cultural image of Africa that the West has created. It defaults to the worst stereotypes that already exist on the internet” (Small).

This resonates with other investigations into AI image generators’ representation of race and nationality. The online magazine Rest of the World tested Midjourney with several prompts built on the generic concept of “a person” (Turk). It found that “an Indian person” was almost always an old man with a beard, and “a Mexican person” was usually a man in a sombrero. For “an American person,” national identity appeared to be overwhelmingly portrayed by the presence of U.S. flags: all 100 images created for the prompt featured a flag, while none of the images for other nationalities included flags. As Amba Kak, executive director of the AI Now Institute, a US-based policy research organization, states, these image generators flatten descriptions into stereotypes “which could be viewed in a negative light” (cited in Turk).

In another study cited by journalist Victoria Turk in “How AI Reduces the World to Stereotypes,” researchers at the Indian Institute of Science found that stereotypes persisted even when prompts were designed to counter them. For example, when they asked the photorealistic image generator Stable Diffusion to create images of “a poor person,” the figures depicted often appeared to be Black. But when they attempted to counter this stereotype by asking the platform for “a poor white person,” many of the figures still appeared Black. We see something similar in the images generated for “transgender man,” which seem either to rely on stereotypes of trans women or to misread the prompt.

We cannot overstate the neo-colonial implications of this growing sector, for the accuracy of these tools is built on imperialist undercurrents: millions of underpaid workers in the Global South support the expanding, multi-billion-dollar AI business. Precariously employed individuals annotate the masses of data that primarily US-based corporations need to train their AI models, labeling and differentiating categories of people and objects. More than two million people in the Philippines alone perform this type of “crowdwork,” and the American companies that contract them pay extremely low hourly rates and skirt basic labor standards for their overseas workers (Tan and Cabato).

In the increasingly biometrically determined world in which we live, one must be digitally legible to the state in order to lay claim to rights. What does it mean, then, when AI image generators exacerbate stereotypical notions of what a “transgender woman” or a “transgender man” looks like? What does this say about social and cultural understandings of gender, transness, and non-binary identity? And how might this cause real harm? I don’t (yet) have answers to these questions, but they bring to mind feminist theorist Donna Haraway’s prescient words: “It matters what stories make worlds, what worlds make stories” (Haraway 12).

Medical anthropologist and trans studies scholar Eric Plemons supplements this analysis by underscoring the relational and existential nature of gender and social recognition: “If recognition is the means through which sex/gender becomes materialized and naturalized, then the conditions of recognition are the conditions of gender: I am a man when I am recognized as a man” (Plemons 10). As recognition is increasingly mediated by technologies such as facial analysis and AI image generators, these technologies hold tremendous power in potentially shaping who counts as a “man” or as a “woman.”

The accessibility and scale of these AI image generators mean they could do real harm. I’ll end with the words of communications scholar Sasha Costanza-Chock, who reminds us to attend to the outsized impact of how communities are represented as this technology expands: “the current path of AI development will reproduce systems that erase those of us on the margins, whether intentionally or not, through the mundane and relentless repetition of reductive norms structured by the matrix of domination […], in a thousand daily interactions with AI systems that, increasingly, weave the very fabric of our lives” (Costanza-Chock 36).

- Bettcher, Talia Mae. “Evil Deceivers and Make-Believers: On Transphobic Violence and the Politics of Illusion.” Hypatia, vol. 22, no. 3, 2007, pp. 43–65.

- Costanza-Chock, Sasha. Design Justice: Community-Led Practices to Build the Worlds We Need. MIT Press, 2020.

- Hamidi, Foad, Morgan Klaus Scheuerman, and Stacy M. Branham. “Gender Recognition or Gender Reductionism?: The Social Implications of Automatic Gender Recognition Systems.” CHI, 2018, pp. 1–13. https://doi.org/10.1145/3173574.3173582.

- Haraway, Donna. Staying with the Trouble: Making Kin in the Chthulucene. Duke University Press, 2016.

- Keyes, Os. “The Misgendering Machines: Trans/HCI Implications of Automatic Gender Recognition.” Proceedings of the ACM on Human-Computer Interaction, vol. 2, no. CSCW, 2018, pp. 1–22. https://doi.org/10.1145/3274357.

- Madianou, Mirca. “Technocolonialism: Digital Innovation and Data Practices in the Humanitarian Response to Refugee Crises.” Social Media + Society, vol. 5, no. 3, 2019, pp. 1–13. https://doi.org/10.1177/2056305119863146.

- Plemons, Eric. The Look of a Woman: Facial Feminization Surgery and the Aims of Trans-Medicine. Duke University Press, 2017.

- Quinan, CL, and Mina Hunt. “Biometric Bordering and Automatic Gender Recognition: Challenging Binary Gender Norms in Everyday Biometric Technologies.” Communication, Culture and Critique, vol. 15, 2022, pp. 211–226. https://doi.org/10.1093/ccc/tcac013.

- Ricanek, Karl. “The Next Biometric Challenge: Medical Alterations.” Computer, vol. 46, no. 9, 2013, pp. 94–96. https://doi.org/10.1109/MC.2013.329.

- Scheuerman, Morgan Klaus, Jacob M. Paul, and Jed R. Brubaker. “How Computers See Gender: An Evaluation of Gender Classification in Commercial Facial Analysis and Image Labeling Services.” Proceedings of the ACM on Human-Computer Interaction, vol. 3, no. CSCW, 2019, pp. 1–33. https://doi.org/10.1145/3359246.

- Schilt, Kristen. Just One of the Guys? Transgender Men and the Persistence of Gender Inequality. University of Chicago Press, 2010.

- Small, Zachary. “Black Artists Say A.I. Shows Bias, With Algorithms Erasing Their History.” New York Times, July 4, 2023. https://www.nytimes.com/2023/07/04/arts/design/black-artists-bias-ai.html. Accessed 5 February 2025.

- Tan, Rebecca, and Regine Cabato. “Behind the AI Boom, an Army of Overseas Workers in ‘Digital Sweatshops.’” Washington Post, August 28, 2023. https://www.washingtonpost.com/world/2023/08/28/scale-ai-remotasks-philippines-artificial-intelligence/. Accessed 5 February 2025.

- Turk, Victoria. “How AI Reduces the World to Stereotypes.” Rest of the World, October 10, 2023. https://restofworld.org/2023/ai-image-stereotypes/. Accessed 5 February 2025.
